When I was developing a graphics engine for video games, the work was driven by immediate needs, schedules, release plans and available resources, most notably time, money and people. Now that I have more time to think carefully about graphics on my own, and seeing all the different directions in which next-gen technology seems to be taking real-time graphics, I'm still trying to figure out, in a wild exercise of fortune-telling, how games are going to look in the next few years, and what we developers should be focusing on in order to make these next-gen techniques possible.

Even trying to classify all the different areas of research and development in computer graphics now seems like a titanic task, and keeping up with all current and future developments is little short of a utopia. Simply put, there is too much information for a single individual to process, and I can imagine technical directors at major development studios spending a lot of time just staying up to date with cutting-edge technology. That's probably why it seems almost impossible these days to be a guru of everything that has to do with graphics and still meet your milestones, unless you're one of the lucky guys who get paid to do research and development and then enlighten the graphics community, either through papers and conferences or by helping create the next stunning-looking blockbuster that everyone will be learning from (i.e. copying) for the next few years. To be honest, I hate those guys as much as I admire them.

In this post, I want to try to summarize the buzz around the modern techniques that seem to be breaking ground these days, and how they could evolve into the graphics of the next generation, and maybe the one after that.

Geometry

The amount of geometric detail that modern hardware can handle keeps growing year after year, giving artists the freedom to tessellate their finely modeled props and characters as much as they want, which easily leads to characters with 10,000, 20,000 or even 100,000 polygons each. At the same time, bump, normal, parallax and, more generally, relief maps add the fine detail that plain geometry can't achieve. There doesn't seem to be much room for improvement here, at least when it comes to static, predefined geometry, but there is still a lot to be said about dynamic geometry, including adaptive tessellation, constructive geometry, deformations, fractures, etc. Several technologies have already been introduced to dynamically create, deform, break or otherwise modify geometry on both the CPU and the GPU.

The only limitation so far is the throughput required to push dynamically generated geometry to the GPU, but as more of that work moves to GPU-based techniques, that shouldn't be such a big problem in the future. Now it's time to move from polygon-based geometry to volume-based matter, which will make bodies look closer to their real-life counterparts and behave as physically accurately as computational limits allow. If there is to be real progress, next-gen models will move away from the metaphor of geometry as the surface enclosing a volume, and instead model it as the actual piece of matter.

Or liquid. Let's not forget that real-time fluid simulation is the next big thing. The day we're able to simulate the behavior of such substances in real time, there will be room for improvement not only in graphics quality but in gameplay too, allowing new and exciting environments to be explored and used to challenge the player.

Materials

Just as geometry is gaining in detail and realism, so are textures and shaders. Many of the techniques widely used in film production differ from what's possible in real time only in texture detail, the number of texture layers and passes, and the precision of the lighting and shading algorithms. But new hardware already allows more and bigger textures, and increasingly intricate shaders. There seems to be no limit to the number of instructions shader developers will be able to use in the future, and the techniques this will make possible are simply impossible to foresee.

It follows logically that textures and shaders will evolve toward accurately modeling the physical properties and appearance of matter instead of surfaces. It is also quite likely that textures will increasingly be used to reflect not only the properties of materials but their state too. This will give rise to more techniques that use textures as general-purpose buffers holding the changes and perturbations of the matter they are applied to. And volume textures (or some generalization of them) should become increasingly standard, following the evolution from polygons to volumes.

Lights, Shadows, Volumes

Most of the buzz you'll find regarding shaders is about techniques for convincingly simulating lighting environments, with all the fine interrelations between lights and physical media. Shadowing, environment mapping, ambient occlusion, radiosity and ray tracing are some of the techniques that have been regularly used in both production and real-time graphics. Most of them are convincing enough, but they are ultimately approximations based on several assumptions about perception. But now it seems that simulating the complex way in which real light interacts with the real world (reflection, transparency, scattering, etc.) is the way to go.

The challenge of improving the quality of lighting and shadowing includes moving into volumetric rendering. This means that the air (or the particles in it) is now part of the equation, and that no lighting or environment will look real enough without a sense of density: smoke, steam, dust. Every single particle in the air (or in whatever fluid or matter is involved) will have to be part of the equation, otherwise the simplification will show. Techniques to simulate light shafts or volumetric scattering already exist, but they are still applied as an extension of simple lighting or shadowing techniques, usually by faking volume and depth in a 2D space. When true volume rendering becomes widely available, maybe we'll be able to render volumes not as a function of the viewpoint, but as a true 3D effect.

Oh, and of course, expect all of these techniques to run in real time. Pre-baking any form of lighting will be banned from development practice the minute geometry and materials become fully dynamic.

Special effects

I would say that where video games will excel in the future is in making it all look alive. When you combine all of the techniques mentioned above, you have a perfect picture (or stereogram) of reality. But it's still a picture. Even though dynamic behavior will be a regular part of that simulation, it's in what happens during change, motion and life that we recognize a world that feels real instead of just believable. The good thing about special effects is that, thanks to Hollywood, we're already used to them, and seeing worlds explode or cities flooded is already part of the collective consciousness. In video games, even the simplest particle effects, impacts, explosions and atmospheric effects (rain, lightning, etc.) add several levels of depth to an otherwise empty (but nice-looking) world.

Special effects are improving in many different directions. There are already a lot of them, and several are combined into one big effect that behaves more realistically. They are less predictable, with just the right amount of randomness. They accurately match the physical properties of the materials they are associated with. And they leave their marks and traces in the world after fading out. That last quality is not very common nowadays, but it comes naturally with the dynamic nature of next-gen graphics. That is to say, special effects are part of the visual representation of a synthetic world, not an addition on top of it.

In fact, I don't think there will be much difference between plain rendering and special effects; both will be part of the same process. I mean, they are already rendered through the same processes, but effects will become part of the definition of digital matter, just as dust is part of a building when it collapses. Effects may exist only as a function of events, transitions and other kinds of processes, but they are part of the world in their own right, and as such they should be part of the world's definition. If we're able to teach a system to behave the way nature does, it should be easier to create the rules that describe what's expected of the components of that world, and maybe some unexpected behavior will emerge naturally.

The lesson learned from recent movies where CGI blends with live footage so well that it's difficult to tell the real from the digital (see Transformers for an example) is simple: human vision has already set the quality standard for digital images. Of course that doesn't apply to non-photorealistic rendering, but faking reality on a computer is the most widespread, and expected, form of graphics today, and viewers' expectations will only go higher. Maybe we could be fooled once with simple tricks, but no kid born in the digital era will be. In time, our ability to spot defects in synthetic images will grow, and by then all the techniques that are merely convincing today will simply not be enough for the casual eye, let alone the expert one. The interactive nature of video games, and the sense of immersion they require, make it even harder, forcing us to create worlds instead of scenes, vision instead of graphics, and life instead of reality.

Friday, September 28, 2007

Thursday, September 27, 2007

Where were you in 1986?

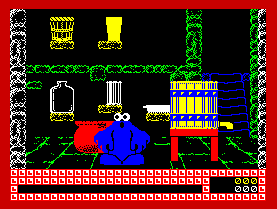

A long time ago, back when most computer games were sold on tape, there was a game called The Trap Door. This might be an odd way to start a post about next-generation gaming, but please stick with me to see where this is going. The game was a typical puzzle-solving adventure where you had to perform several tasks for your boss, who kept you trapped in a castle. Some elements of this game still impress me: there were only six different screens, and five missions to complete using the objects lying around those few rooms. The missions included things as fun as boiling slimeys or crushing eyeballs into juice (it was based on a children's show, you know). Every time Berk (the player character) picked up an object, you could see him grab it from the ground, hold it in his hands, and drop it when necessary. To complete a mission, you had to figure out the logic behind all the objects just by paying attention to the screen. For example, to crush the eyes, you had to use some small eyes as seeds, grow them into an eye plant, collect the big eyeballs, put them in a container, and crush them with the help of a nasty creature that came out of the trap door of the title.

I'm bringing this up because the game had some amazingly modern concepts in its gameplay. The idea of an interactive environment seems to be one of the cornerstones of next-generation gaming. But even more, what I like most about The Trap Door is that there is an implicit language for players to learn and understand, which explains the rules of the game by itself. It lets players try out game objects as they like, with only the implicit assumption that each of them will be of some use in one of the missions. But there is no HUD, no labels, no signs to show what each item does or how it is used. Through a clever use of simple yet clear graphics, everything seems to announce what it is and what it is supposed to be used for.

Compared to recent games that take that kind of freedom to a much larger scale (e.g. Oblivion, where there seem to be thousands of useless objects spread throughout the game, or Dead Rising, where every interactive object is labeled on the HUD even though it's already obvious what can and can't be used), The Trap Door shows an amazing ability to communicate with players in a smarter way, letting them learn the logic instead of being constantly guided by lazy game designers.

I must admit I like being guided a little, at least at the beginning, but following a path that has been laid down for me to walk obediently is not much different from being treated as a puppet, or a well-trained monkey. Action scenes usually still demand the kind of skill that truly comes from hand-eye coordination, finesse and finely paced moves. But when it comes to advancing the story, too many games still treat their players with disdain for their ability to find their own way through the game. Some people complain about games whose storytelling is basically an interactive movie where you get to move the character where he's expected to go, and not much else. It would be better to switch to a simple cutscene that just tells the story. If the player can't change the course of the story anyway, what's the point in asking him to go through a predefined set of movements or actions?

Next-gen gaming is all about choices: letting players actively interact with, and even change, the story, and treating non-player characters not as a game abstraction but in their true role as allies, opponents or mere witnesses. That's what next-generation gaming is supposed to be about: using next-gen technology (graphics, animation, physics) to make that story, those characters and those settings more believable. One good thing about having all that computing power at hand is that it forces game designers to think of more clever ways of challenging the player. The tricks routinely used in the past should be banned and slowly fade into memory. More and more games are offering truly next-gen experiences, with or without the help of cutting-edge technology. After all, if The Trap Door did it back in 1986, it seems plain stupid that a 21st-century game designer can't work that out.
